One of the factors making cloud security inherently challenging is the interplay between each major cloud provider’s platform evolution (fast!), and the adoption and innovation journey of their countless customers (non-linear, often slow!). While the big clouds evolve, adding new features and capabilities, their customers are simultaneously innovating and changing how they leverage cloud technologies to deliver business value. This dynamic leads to mistakes, visibility gaps, and other complexity-driven risks that pose major challenges for even the best cloud security teams.

Cloud is quite humbling from a security perspective – it’s incredibly easy for even the most sophisticated teams to fall victim to simple misconfigurations, let alone novel, complex attack vectors and unpredictable bad actors. Much like disaster recovery plans, cloud security response plans should be regularly reviewed and tested. So it makes sense to run relatively extensive, cross-team exercises involving security, infrastructure, operations, and compliance. One of the biggest reasons to do this is to highlight gaps in logging, policy posture, IAM security, or even good old-fashioned processes and procedures.

Using a ‘tabletop exercise’ format is a great way for an organization to do this and test how it responds to a variety of relevant security events. I put together some example attacks that could be used as part of exercises to test your visibility, log availability, and other aspects of your detection and response plan. These examples won’t apply to all environments, of course, but they’re still valuable for determining how well prepared your organization is to respond to events like these, and for helping you fill in the gaps.

Azure Storage Access-key ransomware attack

A current vendor with guest Contributor access to most of the company’s subscriptions had their account compromised via a phishing attack. The attacker accessed the Azure environment and identified storage accounts containing sensitive data. To be compliant with standards and regulations like ISO 27001, CIS, GDPR, and others, the customer had their data encrypted at rest using Microsoft-managed keys. After finding an orphaned Key Vault in a non-prod subscription, the attacker created a new key. They then modified each target storage account to use a customer-managed key, which was the newly created key. Once the data was encrypted, they deleted the key from the vault.

Notes: This is a great example of how important it is to scope user roles, especially guest users, to have least-privilege access to necessary resources. By being a Contributor in multiple subscriptions the attacker was able to find an obscure Key Vault, create a key, and then use that key to encrypt sensitive corporate data. Having the guest user’s permissions scoped to purposeful, built-in roles on very specific subscriptions would have prevented the attacker from being able to encrypt the data.

Aside from scoping down user privileges on resources like key vaults, you should also make sure to leverage Azure Policy to block unauthorized encryption operations and key vault operations. Even with these policies in place – and especially without them – it’s also important to make sure you have the visibility to discover the problem in the first place.
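As a rough illustration of the visibility piece, here is a minimal Python sketch that flags storage accounts whose encryption has been pointed at a Key Vault you don’t recognize. It assumes you have already exported storage account definitions as dictionaries; the field names (`keySource`, `keyvaultproperties`, `keyvaulturi`) are assumed to follow the Azure Resource Manager shape for storage accounts, and the account names, vault names, and the `find_unapproved_cmk` helper are all hypothetical.

```python
from urllib.parse import urlparse


def find_unapproved_cmk(storage_accounts, approved_vault_hosts):
    """Return names of accounts encrypted with a key from an unapproved vault."""
    flagged = []
    for account in storage_accounts:
        encryption = account.get("properties", {}).get("encryption", {})
        # Accounts still on Microsoft-managed keys have nothing to check here.
        if encryption.get("keySource") != "Microsoft.Keyvault":
            continue
        vault_uri = encryption.get("keyvaultproperties", {}).get("keyvaulturi", "")
        host = (urlparse(vault_uri).hostname or "").lower()
        if host not in approved_vault_hosts:
            flagged.append(account["name"])
    return flagged


# Hypothetical sample data for a tabletop walkthrough.
accounts = [
    {"name": "financedata",
     "properties": {"encryption": {"keySource": "Microsoft.Storage"}}},
    {"name": "hrrecords",
     "properties": {"encryption": {
         "keySource": "Microsoft.Keyvault",
         "keyvaultproperties": {
             "keyvaulturi": "https://orphaned-nonprod-kv.vault.azure.net",
             "keyname": "new-key"}}}},
    {"name": "appdata",
     "properties": {"encryption": {
         "keySource": "Microsoft.Keyvault",
         "keyvaultproperties": {
             "keyvaulturi": "https://corp-prod-kv.vault.azure.net",
             "keyname": "app-key"}}}},
]

print(find_unapproved_cmk(accounts, {"corp-prod-kv.vault.azure.net"}))
```

A check like this only detects the change after the fact, so during the exercise it’s worth asking how quickly it would run and who would be alerted.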

Malicious VPC peering

An employee was on Reddit looking for an answer to a cloud networking issue and inadvertently made public the ID of an internal VPC. An attacker saw this and used the VPC ID to make a VPC peering request. The peering request looked legitimate, as there had never been any illegitimate peering requests to date, so it was accepted, giving the attacker a direct route to internal resources.

Notes: This is a great example of the spirit of these exercises: it would require a few layers of security to fail, but at the same time, it helps your team think through how they handle VPC peering requests and inventory what peering configurations already exist, which ultimately helps you improve your security posture over time.
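The peering inventory mentioned above could be sketched roughly as follows. This assumes you have already pulled the peering connections into dictionaries shaped like the EC2 `DescribeVpcPeeringConnections` response; the account IDs, connection IDs, and the `untrusted_peerings` helper are hypothetical.

```python
# Only pending or active connections can give (or are about to give) a route.
LIVE_STATES = {"pending-acceptance", "active"}


def untrusted_peerings(peering_connections, trusted_account_ids):
    """Return (connection id, state, unknown owner ids) for risky peerings."""
    flagged = []
    for pcx in peering_connections:
        state = pcx["Status"]["Code"]
        if state not in LIVE_STATES:
            continue
        owners = {
            pcx["RequesterVpcInfo"]["OwnerId"],
            pcx["AccepterVpcInfo"]["OwnerId"],
        }
        unknown = owners - set(trusted_account_ids)
        if unknown:
            flagged.append((pcx["VpcPeeringConnectionId"], state, sorted(unknown)))
    return flagged


# Hypothetical sample data: one internal peering, one request from an outsider.
connections = [
    {"VpcPeeringConnectionId": "pcx-internal",
     "Status": {"Code": "active"},
     "RequesterVpcInfo": {"OwnerId": "111111111111", "VpcId": "vpc-aaa"},
     "AccepterVpcInfo": {"OwnerId": "222222222222", "VpcId": "vpc-bbb"}},
    {"VpcPeeringConnectionId": "pcx-unknown",
     "Status": {"Code": "pending-acceptance"},
     "RequesterVpcInfo": {"OwnerId": "999999999999", "VpcId": "vpc-zzz"},
     "AccepterVpcInfo": {"OwnerId": "111111111111", "VpcId": "vpc-aaa"}},
]

print(untrusted_peerings(connections, {"111111111111", "222222222222"}))
```

Comparing owner IDs against a list of accounts you actually control is the point here: a request that merely looks legitimate would still be flagged.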

Compromised Terraform files

A company terminated the employment of an individual with corporate cloud platform access. The employee wasn’t off-boarded correctly and still had programmatic access to the corporate AWS environment through Terraform and a working API access key. The ex-employee ran ‘terraform destroy’ locally, either inadvertently or maliciously, and removed corporate cloud resources, causing customer-facing outages and a barrage of application alerts.

Notes: Outside of the stark reminder about the importance of a robust process for user off-boarding, this one really highlights the critical role infrastructure resiliency can play in responding to an event. This means it’s really important that you’re leveraging the resilience options built into AWS, like S3 Versioning, Object Lock, or cross-region replication. The idea is to make sure your persistently stored data is versioned, replicated, and available in more than one region.
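One way to turn the versioning point into a quick check is sketched below. It assumes you have collected each bucket’s versioning configuration into a dictionary shaped like the S3 `GetBucketVersioning` response, where `Status` is `Enabled`, `Suspended`, or absent if versioning was never turned on. The bucket names and the `buckets_missing_versioning` helper are hypothetical.

```python
def buckets_missing_versioning(versioning_by_bucket):
    """Return bucket names whose versioning is suspended or was never enabled."""
    return sorted(
        name
        for name, config in versioning_by_bucket.items()
        if config.get("Status") != "Enabled"
    )


# Hypothetical sample data keyed by bucket name.
versioning = {
    "corp-terraform-state": {"Status": "Enabled"},
    "corp-customer-uploads": {"Status": "Suspended"},
    "corp-app-logs": {},  # versioning never configured
}

print(buckets_missing_versioning(versioning))
```

In a tabletop setting, the resulting list is a useful prompt: for each bucket on it, ask what would be lost if its contents were deleted today.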

Vulnerable MS SQL Server running on EC2

The company has a large number of MS SQL Servers running on EC2 instances across multiple AWS accounts. Many of the instances are set up for public access and feature poorly configured databases. An attacker is able to brute force a SQL login, take advantage of an impersonation chain, and download a malicious payload onto one of the host machines.

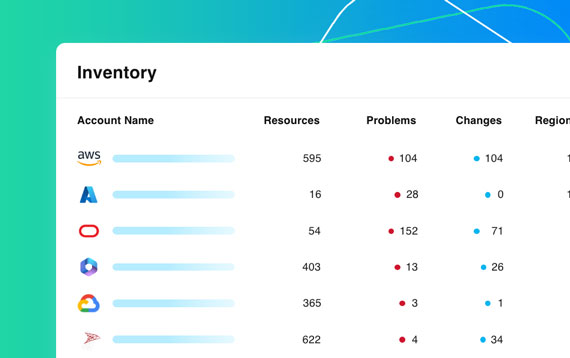

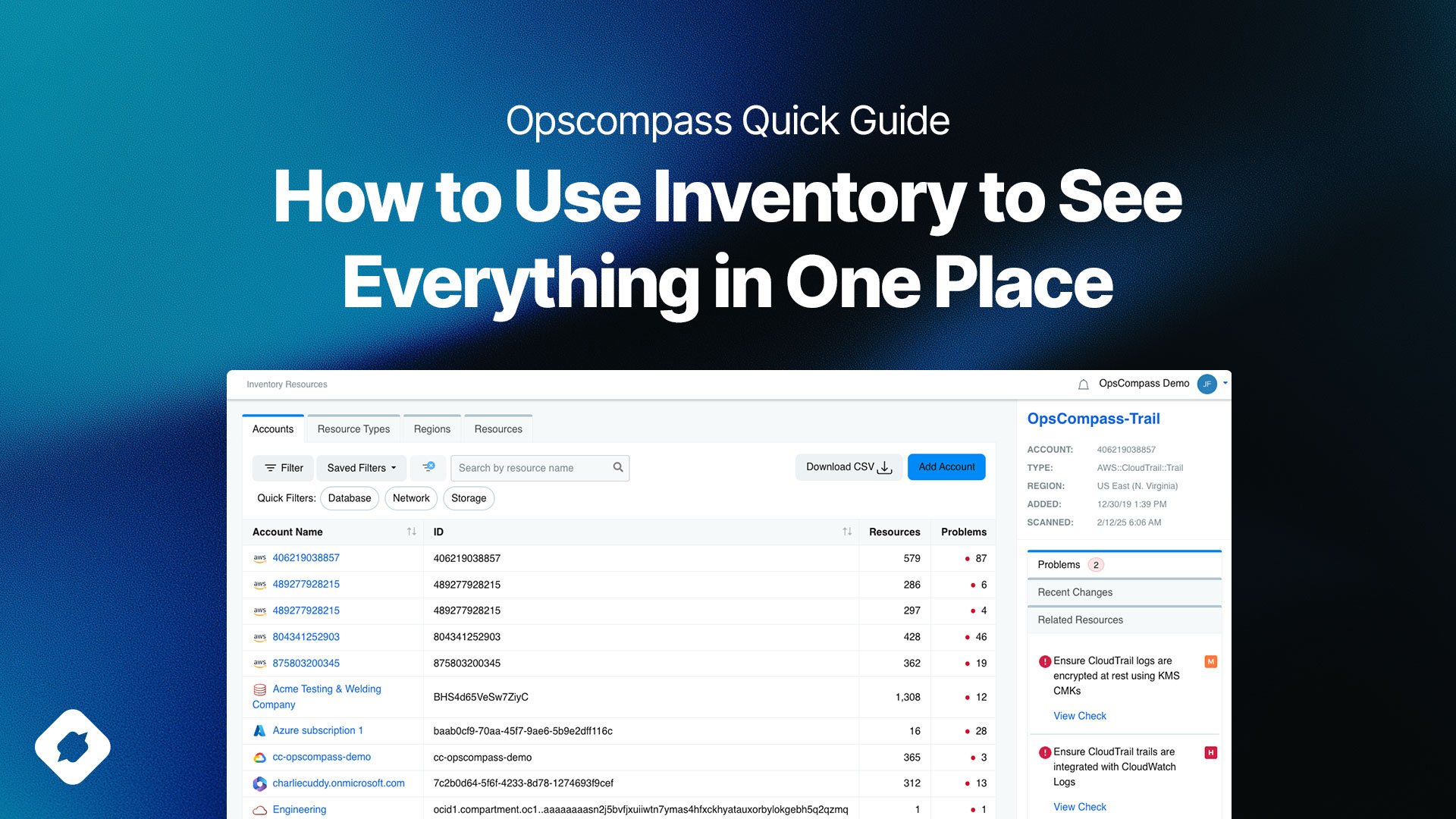

Notes: You should definitely maintain an inventory of your databases and datastores if you are not already. Being able to easily audit your databases to ensure patch levels and verify that the surrounding infrastructure is configured correctly is critical. In this situation, we see poorly configured databases in addition to poorly configured infrastructure. Across multiple clouds it can be even more of a challenge to establish the necessary visibility.
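For the infrastructure side of that audit, a minimal sketch might look for security groups that expose the default SQL Server port (TCP 1433) to the entire internet. It assumes security group data shaped like the EC2 `DescribeSecurityGroups` response; the group IDs and helper names are hypothetical, and databases listening on non-default ports would need a broader check.

```python
MSSQL_PORT = 1433  # default SQL Server port; adjust if yours differs


def exposes_mssql(permission):
    """True if an ingress rule opens the SQL Server port to any address."""
    protocol = permission.get("IpProtocol")
    if protocol == "-1":  # "-1" means all protocols and all ports
        port_open = True
    elif protocol == "tcp":
        port_open = permission["FromPort"] <= MSSQL_PORT <= permission["ToPort"]
    else:
        port_open = False
    open_v4 = any(r.get("CidrIp") == "0.0.0.0/0"
                  for r in permission.get("IpRanges", []))
    open_v6 = any(r.get("CidrIpv6") == "::/0"
                  for r in permission.get("Ipv6Ranges", []))
    return port_open and (open_v4 or open_v6)


def publicly_exposed_groups(security_groups):
    """Return IDs of security groups with at least one exposing rule."""
    return [
        group["GroupId"]
        for group in security_groups
        if any(exposes_mssql(p) for p in group.get("IpPermissions", []))
    ]


# Hypothetical sample data.
groups = [
    {"GroupId": "sg-public-sql", "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 1433, "ToPort": 1433,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]},
    {"GroupId": "sg-internal-sql", "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 1433, "ToPort": 1433,
         "IpRanges": [{"CidrIp": "10.0.0.0/8"}]}]},
    {"GroupId": "sg-web", "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]},
]

print(publicly_exposed_groups(groups))
```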

Mysterious resource

A c7g.8xlarge EC2 instance was discovered in a typically unused region on a non-production account. The machine was named “dev-deleteme” and had been consistently running at 100% CPU. No one on the dev team was aware of the virtual machine. After some investigation, it was determined that the instance was being used to mine crypto.

Notes: This situation has been fairly common in recent years and presents a great opportunity to make sure you have the visibility to discover problems like this faster. It also provides an impetus to expand your service control policies and scope down the number of active AWS regions in your organization.
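A region-restricting service control policy of the kind described above can be sketched as a small Python helper that builds the policy document. The approach, a `Deny` statement conditioned on the `aws:RequestedRegion` key with global services exempted through `NotAction`, follows a commonly used pattern; the specific regions and the exempt-action list below are illustrative assumptions, not a complete or recommended set, and `region_restriction_scp` is a hypothetical helper.

```python
import json


def region_restriction_scp(approved_regions, exempt_actions):
    """Build an SCP document denying requests outside the approved regions."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyOutsideApprovedRegions",
            "Effect": "Deny",
            # Global services are exempted so they keep working everywhere.
            "NotAction": list(exempt_actions),
            "Resource": "*",
            "Condition": {
                "StringNotEquals": {"aws:RequestedRegion": list(approved_regions)}
            },
        }],
    }


# Illustrative values only: review both lists against your own environment.
policy = region_restriction_scp(
    approved_regions=["us-east-1", "us-west-2"],
    exempt_actions=["iam:*", "organizations:*", "route53:*", "sts:*", "support:*"],
)

print(json.dumps(policy, indent=2))
```

Testing a policy like this in a sandbox organizational unit first is a sensible step, since an overly narrow exemption list can block services your teams rely on.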

Conclusion

As I mentioned before, these scenarios don’t apply to every environment, and they also represent multiple layers of failure. These exercises are great for finding areas where you should be capturing logs or could implement a policy gate to mitigate a risk. With the prevalence of compromised or leaked credentials in data breaches, it’s not only important to take a Zero Trust approach to user privileges, but it’s also critical that you have enough visibility to know you have a problem.

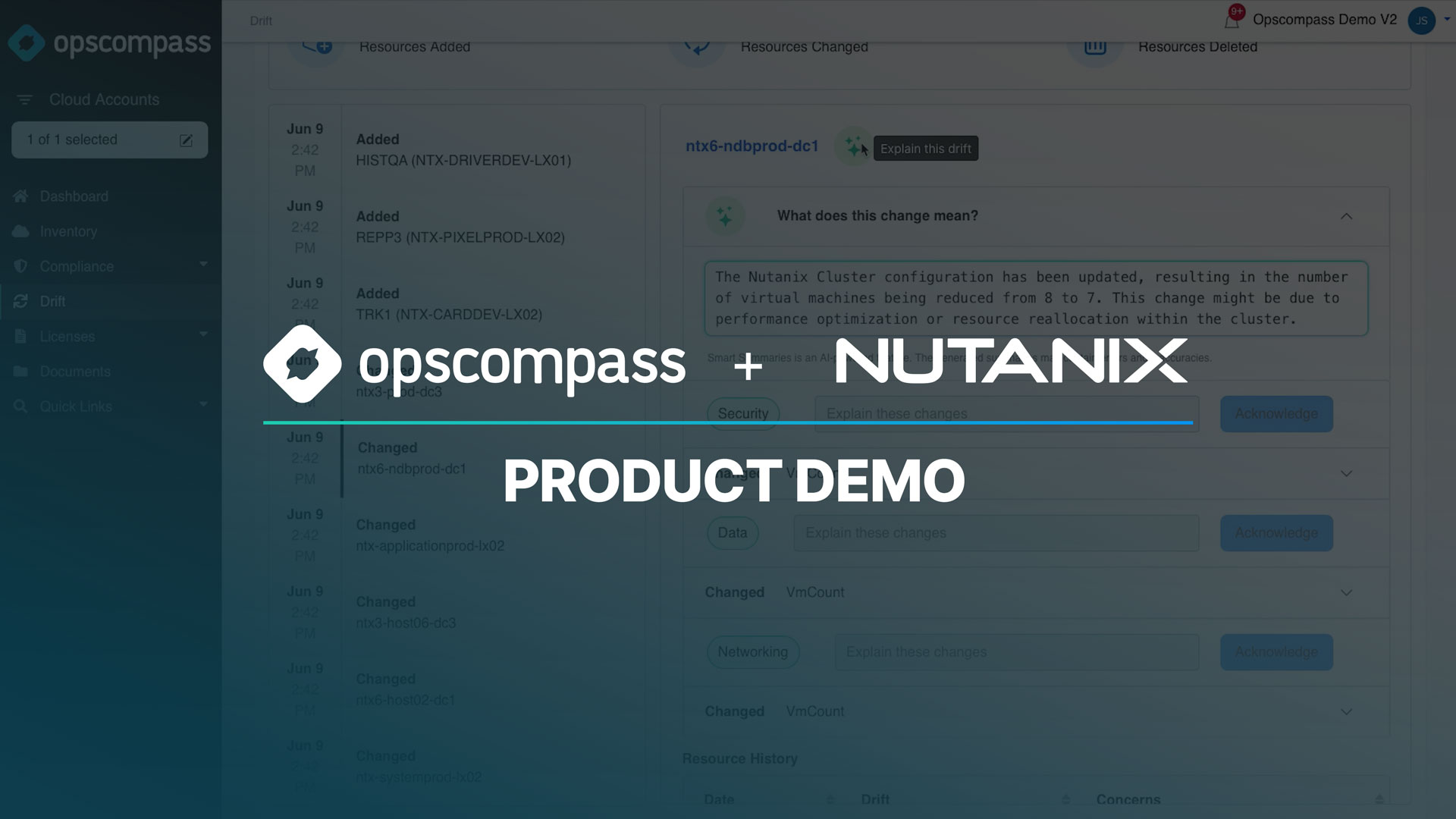

The original release of OpsCompass was focused on detecting configuration drift and helping our customers understand how their environments are changing. One of the things I’ve learned over the years is that no matter how sure customers are of their own security, management, and governance programs, they’re constantly dealing with things they didn’t predict. Adequately protecting your mission-critical data, databases, and applications requires being very intentional about having visibility and intelligence into your critical assets.