It is useful to go back in time in order to move forward

Let’s go back several years, to when all we had to worry about was an on-premises data center. The basic resources (compute, storage, and network) were literally an arm’s length away. All we had to do was put a big fence (a firewall) around them, and we could effectively control incoming and outgoing traffic. As applications became more sophisticated and distributed, we built up teams that controlled access to higher-level abstractions of the core resources (e.g., network, database, and VMs). To put an application into production, you had to negotiate permissions and coordinate with each of those teams. While extremely slow, that process effectively created an artificial security layer.

Now along comes Digital Transformation. The traditional ways were too slow, restrictive, and costly to facilitate transformation. To compensate, Agile, DevOps, and the public cloud became the standard. Now we’re cooking with gas: we are lightning-fast, we have all of the parts integrated into a pipeline, and we have seemingly infinite access to compute, storage, and network in the cloud. We are so efficient that a single developer can write code, push it through a pipeline into production, and later market the service to end users. This is great, right?

Well yeah, but we lost some things along the way.

- Security – we have plenty of gates: agents everywhere, embedded in code or monitoring VMs. If that doesn’t cover it, don’t worry, we have templates to inherit from that define all of the required security policies. Surely no one would ever modify a template; they are always exactly what we need, right?

- Compliance – we have a people process that established all of the templates and governance several years ago. I am certain that we are still adhering to these same policies; besides, nothing has changed since we signed off on them, right? And what about the negotiation with the database group to create a stored procedure? While terribly inefficient by today’s standards, it was another set of eyes that helped assure compliance.

- Cost – developers can request VMs and resources without going through that messy capital expenditure process to procure new hardware and then waiting 6 months for it to be installed. Besides, what could happen if I turn on a service, forget to turn it off, and let it run over the weekend? It won’t cost too much, will it?

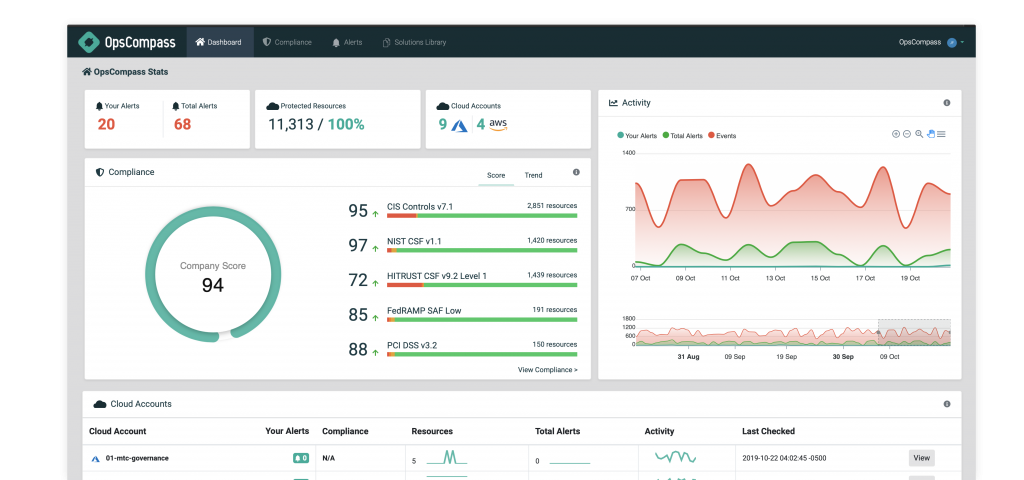

The right information at the right time, with real-time visibility into what’s happening, what needs your attention, and what to do next across multi-cloud environments.

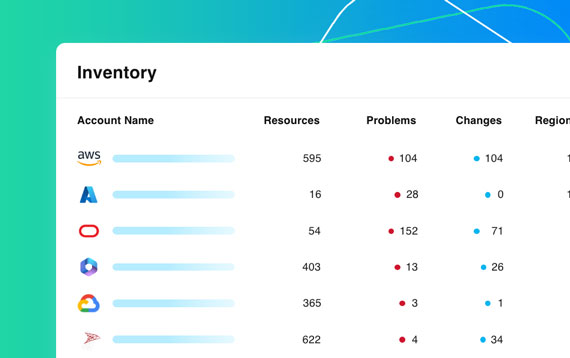

We at OpsCompass think there is a better way. By monitoring all of the events of the underlying platform (Azure, AWS, and GCP), we let developers continue to move at DevOps speed, use templates, and request resources safely.

OpsCompass captures the events from the platform and continuously baselines the changes against acceptable risk. When an alert meets a threshold, we route the notification to the group most impacted by it. Once the team receives the notification, we show the specific change that was made, offer a remediation process, and audit all of the changes. This works for security, compliance, and cost issues alike, which is why we focus on these common denominators.
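To make the flow above concrete, here is a minimal sketch of the general pattern: compare each change event against a baseline, check it against a risk threshold, route it to a team, and keep an audit trail. This is not OpsCompass code or its API. Every name here (`ChangeEvent`, `BASELINE`, `ROUTES`, `RISK_THRESHOLD`, the team names, and the idea of a numeric risk score attached to each event) is a hypothetical stand-in chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeEvent:
    """A hypothetical platform change event (not a real cloud event schema)."""
    resource: str
    setting: str
    new_value: str
    category: str  # "security", "compliance", or "cost"
    risk: int      # assumed risk score from 0 to 100


# Assumed baseline: the approved value for each (resource, setting) pair.
BASELINE = {
    ("storage-account-1", "public_access"): "disabled",
    ("vm-batch-7", "state"): "stopped",
}

# Assumed routing table: which team is most impacted by each category.
ROUTES = {
    "security": "security-team",
    "compliance": "compliance-team",
    "cost": "finance-team",
}

RISK_THRESHOLD = 50  # assumed cutoff; alerts at or above it are routed


def evaluate(event, baseline, threshold=RISK_THRESHOLD):
    """Return a notification dict if the event drifts from the baseline
    and meets the risk threshold; otherwise return None."""
    expected = baseline.get((event.resource, event.setting))
    if expected is None or expected == event.new_value:
        return None  # untracked setting, or no drift from the baseline
    if event.risk < threshold:
        return None  # drift exists but is below the alerting threshold
    return {
        "team": ROUTES.get(event.category, "platform-team"),
        "resource": event.resource,
        "setting": event.setting,
        "expected": expected,
        "actual": event.new_value,
    }


def process(events, baseline):
    """Audit every event and collect the notifications that should be sent."""
    audit_log = []
    notifications = []
    for event in events:
        audit_log.append(event)  # every change is recorded, alert or not
        notification = evaluate(event, baseline)
        if notification is not None:
            notifications.append(notification)
    return notifications, audit_log
```

In this sketch, each notification carries both the expected and the actual value, so the receiving team sees the specific change rather than a generic alert. A real system would also need to decide how risk is scored and how the baseline is updated over time; both are left out here.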