This article is part of our State of Cloud Security 2021 Series, which will interview a diverse mix of cloud security experts, decision-makers, and practitioners with the goal of better understanding their perspectives on the current state and future of cloud security.

The following is an interview that OpsCompass CTO John Grange recently had with Herman Boma, Cloud & Big Data Consultant at Impact Makers.

JG: What is the state of cloud security today?

HB: As cloud adoption continues to grow and organizations move more and more applications to the cloud, we will see enterprises increasingly embrace models that support multiple clouds. Today it is evident that the cloud model is not about just one cloud but the ability to adopt numerous clouds. Cloud-native technologies are becoming mainstream, and their deployments are maturing and increasing in size. This cloud-native shift means organizations are building complex applications, deploying and managing them quickly, with more automation than ever before. It’s also clear from the Cloud Native Computing Foundation’s (CNCF) seventh annual survey that core technologies such as Kubernetes have been proven, and there is widespread adoption across enterprises. All these trends have significant ramifications for cloud security.

As multi-cloud strategies become fully mainstream, companies will need to figure out how to apply consistent approaches to securing their infrastructure and do it efficiently. Cloud-native technologies will require companies to rethink how they implement and deploy security policy.

JG: What are the most common challenges organizations face when it comes to cloud security today?

HB: Mental Model Shift

The biggest challenge I see is that most organizations aren’t adequately prepared for cloud security because they have failed to transition their mindset from the legacy data center security model to the cloud-native mindset. Too often, organizations migrate existing on-premises applications into the cloud without making this shift in mental model, and as a result they force outdated abstractions and security models into the cloud. Let’s look at a few examples of this.

From high-trust security to zero-trust security

Traditional security was based on a “high-trust” world enforced by a strong perimeter and firewall. One can think of this as castle walls around the organization, and everything inside the castle is good and needs to be protected. Unfortunately, this model of thinking is not sufficient for our new context, as the cloud is a “low-trust” or “zero-trust” environment with no clear or static perimeter. In a cloud-native environment, although the network perimeter still needs to be protected, the perimeter-based security approach is no longer sufficient. Hence, organizations must shift their focus from perimeter-based security approaches, in which internal communications are considered trusted, to a zero-trust security mindset where service-to-service communication is verified, and trust is no longer implicit but explicit.
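To make "trust is explicit, not implicit" concrete, here is a minimal sketch of one way a receiving service might verify a caller instead of trusting it for being inside the network. It assumes each service holds a shared secret and signs its requests with HMAC-SHA256; the service names, keys, and function names are hypothetical, and real deployments more commonly rely on mutual TLS or signed tokens issued by an identity provider.

```python
import hashlib
import hmac

# Hypothetical per-service secrets, for illustration only. A real system
# would load these from a secret manager or use workload certificates.
SERVICE_KEYS = {
    "billing": b"billing-demo-key",
    "orders": b"orders-demo-key",
}


def sign_request(service: str, body: bytes) -> str:
    """Return a hex HMAC-SHA256 tag binding the body to the calling service."""
    return hmac.new(SERVICE_KEYS[service], body, hashlib.sha256).hexdigest()


def verify_request(claimed_service: str, body: bytes, tag: str) -> bool:
    """Accept a request only if the tag proves the caller holds the claimed key."""
    key = SERVICE_KEYS.get(claimed_service)
    if key is None:
        return False  # unknown callers are rejected, wherever they sit on the network
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), tag.encode())
```

Note that the check never consults the caller's network location: a request from "inside the castle" with a missing or wrong tag is rejected just like one from outside.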

From fixed IPs and hardware to dynamic cloud infrastructure

In a traditional security model, applications were deployed to specific machines, and the IP addresses of those machines hardly changed. This meant that our security tooling could rely on a relatively static and predictable environment and identities to enforce policies using tools such as firewalls with IP addresses as identifiers. However, with dynamic cloud infrastructure, shared hosts, and frequently changing jobs, using a firewall to control access between services doesn’t work. Therefore, organizations can’t rely on the fact that a specific IP address is tied to a particular service. As a result, there must be a shift in mindset about the locus of identity in cloud-native environments from the host (IP address or hostname) to application-based identity. And this shift carries massive implications for how applications are secured in the cloud.
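The shift from host-based to application-based identity can be sketched as an authorization check keyed on who the workload is rather than where it runs. This is only an illustration: the `Caller` type, the `ALLOWED_CALLS` table, and the service names are assumptions, and it presumes the `service` field was already established by some verified mechanism such as a certificate or signed token.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Caller:
    service: str  # workload identity, assumed to be verified upstream
    ip: str       # current address; expected to change as workloads move


# Hypothetical policy: pairs of (calling service, target service).
ALLOWED_CALLS = {
    ("orders", "billing"),
    ("frontend", "orders"),
}


def is_allowed(caller: Caller, target: str) -> bool:
    """Authorize on application identity; the IP address plays no part."""
    return (caller.service, target) in ALLOWED_CALLS
```

With this shape, `Caller("orders", "10.0.0.5")` and `Caller("orders", "10.9.9.9")` get the same answer, while a different service that happens to inherit the address `10.0.0.5` does not gain the access of its previous occupant.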

Conclusion:

In the same spirit in which organizations are adopting cloud-native principles as they migrate and modernize their legacy applications to take full advantage of the cloud, they must embrace cloud-native security principles to properly secure their applications in the cloud.

JG: What lessons can be learned from the biggest cloud-related breaches of 2020?

HB: Beware of “root cause”.

In the aftermath of a security breach, there is often immense pressure to come up with reasons and recommendations. This pressure, usually from senior management, to figure out what happened can often translate into pressure to reach superficial and easy explanations. Consequently, organizations focus narrowly on a single root cause of a breach, such as an admin failing to properly configure an S3 bucket or the failure to properly implement a control. Ultimately this might result in false quick fixes and countermeasures, such as writing extra security policies to deal with the latest “hole” uncovered by the security incident or, in some cases, firing the people involved in the incident as “bad apples”.

The first key lesson here is that post-breach attribution to a “root cause” is fundamentally wrong. There are usually multiple causes for security breaches and incidents. Each of them is usually necessary but insufficient in itself to create an incident. Only together are these causes sufficient, and it is generally their confluence that creates the circumstances required for the incident. Even when you can establish clearly that an employee misconfigured an S3 bucket, which led to a data breach, that is only the beginning of truly understanding what happened and not the end. James Reason puts it this way: “Errors are consequences not causes: …errors have a history. Discovering an error is the beginning of a search for causes, not the end. Only by understanding the circumstances…can we hope to limit the chances of their recurrence.”

Security must be embedded

Security should be implemented by design, rather than as an afterthought delegated to third-party software. Hence, organizations must embed comprehensive security monitoring, granular risk-based access controls, and system security automation throughout all facets of the infrastructure in order to focus on protecting critical assets in real time within a dynamic threat environment. When infrastructure, compute, network, and storage are all delivered “as code,” so too can security be delivered “as code.” This means embedding security policy within apps, between services, within and around Kubernetes, and even on the cloud platforms themselves. Cloud-native requires us to rethink how we implement and deploy security policies.

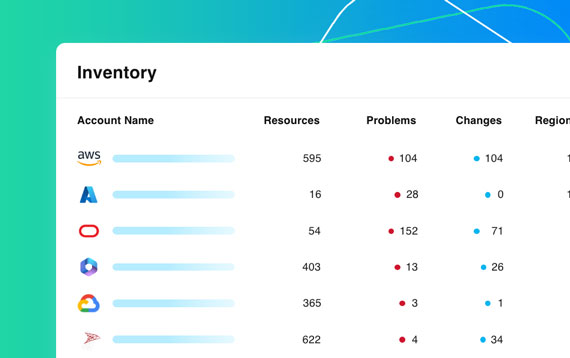

Poor visibility leads to slow detection

Everybody seems to have caught up to the idea that we should log everything. Even AWS turns on CloudTrail by default. But just turning on logging is not going to help you that much. If logs are not actively used to gain visibility, context, and understanding, then security visibility will remain foggy and detection will be slow. Even the most well-orchestrated cyber attacks can be repelled or mitigated if they are detected in a timely fashion. Organizations always seem to be operating at one of two extremes: either they’re drowning in security event data or they have very little. Either way, most are blind, because visibility doesn’t come from the volume or velocity of the data they gather but from their active investment in turning it into actionable, useful sense-making tools. Logs are only the raw material, but they can be refined to tell a comprehensive story of what is changing across the infrastructure in real time.
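As a small illustration of "refining" logs into a story of change, the sketch below reduces a stream of audit events to a per-resource timeline of sensitive configuration changes. The flat event shape (`time`, `actor`, `action`, `resource`) is a simplifying assumption and not the actual CloudTrail record format, and the set of actions treated as sensitive is only an example.

```python
from collections import defaultdict

# Example actions worth surfacing; a real list would be broader and is
# an assumption of this sketch.
SENSITIVE_ACTIONS = {"PutBucketAcl", "PutBucketPolicy", "DeleteBucketEncryption"}


def summarize_changes(events):
    """Group sensitive configuration changes by resource, oldest first.

    Each event is a dict with 'time' (ISO 8601 string), 'actor',
    'action', and 'resource' keys (hypothetical, simplified schema).
    """
    changes = defaultdict(list)
    for event in sorted(events, key=lambda e: e["time"]):
        if event["action"] in SENSITIVE_ACTIONS:
            changes[event["resource"]].append(
                (event["time"], event["actor"], event["action"])
            )
    return dict(changes)
```

The routine read traffic drops out, and what remains answers a question a responder actually asks: who changed what on this resource, and in what order.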

JG: What are 3-5 pieces of advice for organizations looking to improve their cloud security in 2021?

HB: Security as code

It is surprising how many organizations develop fancy security best practices, security controls, etc., that at best are partially and inconsistently applied across the organization. As these policies, controls, and best practices increase, the chasm between good intentions and the desired state becomes even wider. At the same time, failures from ineptitude, by which I mean that the knowledge exists yet we fail to apply it correctly and consistently, are on the rise in the cloud. A typical example that comes to mind is cloud misconfiguration errors, which remain the number one cause of data breaches. A good number of these breaches can be tied back to errors made when configuring these cloud services, as opposed to security flaws in the services themselves. These misconfigurations do not generally result from a lack of knowledge of best practices but from a failure to apply common knowledge with precision, consistency, and scale. So instead of trying to enforce security through checklists, point-in-time audits, outdated policy documentation, and labor-intensive, subjective security reviews, organizations must encode and enforce this knowledge as code. Organizations must move their policies out of documentation and into code, which allows for the automation of checks and balances, removes arbitrary interpretation and assessments from reviews, and supplies immediate results for engineers. In practice, this would be wired into configuration management tools and the Continuous Delivery pipeline as embedded guardrails for the provisioning workflow. The fundamental desire here is to achieve programmable security controls by automating security policies, controls, assessments, and enforcement across the organization, ultimately bridging the chasm between the desired state and good intentions.
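A minimal sketch of what "policy as code" can look like is shown below: written rules become functions that a pipeline runs against every proposed storage configuration before provisioning. The configuration keys (`public_read`, `encryption_enabled`, `logging_enabled`) and the three rules are hypothetical; in practice teams often use a dedicated policy engine rather than hand-written checks.

```python
from typing import Dict, List


def check_bucket(config: dict) -> List[str]:
    """Return the policy violations for one storage bucket configuration."""
    violations = []
    if config.get("public_read", False):
        violations.append("bucket must not allow public read")
    # Missing settings are treated as non-compliant (secure by default).
    if not config.get("encryption_enabled", False):
        violations.append("bucket must enable encryption at rest")
    if not config.get("logging_enabled", False):
        violations.append("bucket must enable access logging")
    return violations


def evaluate(configs: Dict[str, dict]) -> Dict[str, List[str]]:
    """Check every resource; an empty result means the pipeline may proceed."""
    results = {}
    for name, config in configs.items():
        violations = check_bucket(config)
        if violations:
            results[name] = violations
    return results
```

A pipeline step could call `evaluate` on the parsed infrastructure definition and fail the build when the result is non-empty, giving engineers the same answer every time instead of a reviewer's interpretation of a document.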

Embrace Security Chaos Engineering

The second piece of advice follows closely from the last one. Assume we have automated our security best practices, security reviews, etc., as code, and we are consistently applying our security controls with greater certainty and confidence. But six months from now, we get breached again. How is this possible after all the work we have done? What are we missing? It appears we are doing all the right things. The first fundamental thing we have to understand is that security is a complex problem. Complex systems fail in unpredictable ways; hence, having all the controls and automation in the world is no guarantee of security. Checklist security (regulations, audits) is not sufficient in a complex world. It’s possible to be technically compliant with a standard and still not have a secure environment. Hackers don’t care about our controls and are opportunistically looking for the path of least resistance.

Organizations must therefore embrace the reality that failure will happen. People will click on the wrong thing. Security implications of simple code changes won’t be apparent. Mitigations will accidentally be disabled. Things will break. Once this reality is accepted, organizations must pivot from trying to build perfectly secure systems to continually asking questions like “How will I know this control continues to be effective?” and “What will happen if this mitigation is disabled, and will I be able to see it?”

So what do we do?

Adopt security chaos engineering. Security chaos engineering is defined as identifying security control failures through proactive experimentation to build confidence in the system’s ability to defend against malicious conditions in production.

Through continuous security experimentation, organizations can become better prepared and reduce the likelihood of being caught off guard by unforeseen disruptions. By intentionally introducing failure modes or other events, organizations can discover how well-instrumented, observable, and resilient their systems genuinely are. They can validate critical security assumptions, assess abilities and weaknesses, then move to harden the former and mitigate the latter. Security must ultimately pivot from purely defensive postures and embrace a more resilient posture, and let go of the impossible standard of perfect prevention.
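The experiment loop described above can be sketched in a few lines: deliberately disable one control in a copy of the environment, then check whether the monitoring in place would have noticed. Everything here is a toy model under stated assumptions: controls are represented as boolean flags in a dictionary, `detect_drift` stands in for whatever detection an organization actually runs, and a real experiment would target real systems with a controlled blast radius.

```python
def detect_drift(baseline: dict, observed: dict) -> list:
    """Return monitored controls whose observed state differs from the baseline."""
    return sorted(
        name for name, wanted in baseline.items() if observed.get(name) != wanted
    )


def run_experiment(baseline: dict, environment: dict, control: str) -> dict:
    """Disable one control in a copy of the environment and report detection."""
    injected = dict(environment)  # never mutate the caller's environment
    injected[control] = False     # the injected failure
    detected = control in detect_drift(baseline, injected)
    return {"control": control, "detected": detected}


# Hypothetical setup: three controls are enabled, but only two are monitored.
environment = {"bucket_encryption": True, "flow_logs": True, "mfa_required": True}
baseline = {"bucket_encryption": True, "flow_logs": True}
```

In this setup, disabling `flow_logs` is detected, while disabling `mfa_required` is not, because nothing monitors it. That second result is the useful one: the experiment surfaces a blind spot before an attacker does.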

The breach is inevitable.

Organizations spend too much energy thinking about their first line of defense and not enough time thinking about what happens when that defense fails. To quote Mike Tyson, “Everyone has a plan until they get punched in the mouth.” The plan has to start from there. It can’t start from this nice steady state of, “Oh, we got an S3 bucket exposed to the public,” blah, blah, blah. Organizations must assume they are going to lose the first battle and build in the redundancies, the controls, and the layers of security that allow them to lose that first battle and still win the war.

JG: What’s the future of cloud security?

HB: Security and DevSecOps will shift left as attack vectors arise

With the proliferation of new cloud-native application architectures and the migration of more apps to the cloud, we are bound to see attack vectors emerge to exploit these cloud-native applications. Security will increasingly shift left to limit risk and stop mistakes as early as possible. We will see more organizations move security practices, policies, processes, and guidelines from PDFs, wikis, manuals, etc., to software and policy-as-code, building security directly into software-defined platforms and CI/CD pipelines.

Zero Trust Adoption will continue to rise

As the complexity of current and emerging cloud, multi-cloud, and hybrid network environments continues to accelerate, and the nature of adversarial threats evolves to expose the ineffectiveness of the traditional security mindset, Zero Trust is quickly gaining momentum as a new security model and framework that applies a ‘never trust, always verify’ approach to workloads, users, and devices before granting access to an organization’s IT ecosystem and underlying data.